Early Mechanical Devices: The First Steps in Calculation

Imagine you’re in ancient times, and you need to count a large number of items. How would you do it? This was the challenge that led to the creation of the abacus. Picture a frame with beads that slide on rods. By moving these beads, people could perform basic arithmetic quickly and accurately. This simple tool, with early forms dating to around 2400 BCE, was the first step in our computing journey.

As time passed, inventors looked for ways to make calculations even easier. In 1642, Blaise Pascal created a machine that could add and subtract automatically. Think of it as a system of gears and numbered wheels: turning one wheel past 9 nudged the next wheel forward by one, so the machine carried digits on its own. Later, in 1673, Gottfried Leibniz took this idea further. His “stepped reckoner” could not only add and subtract but also multiply and divide! These machines were like early calculators, doing the hard work for us.
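To make the idea of wheels that carry digits more concrete, here is a minimal Python sketch. It is only an illustration, not a reconstruction of Pascal’s actual mechanism: the function names `dial` and `add_number`, and the choice of six wheels, are assumptions made for this example.

```python
def dial(wheels, position, steps):
    """Turn one wheel forward by `steps` notches.

    `wheels` holds one digit per wheel, least significant wheel first.
    Whenever a wheel rolls over from 9 to 0, the next wheel advances by one.
    """
    for _ in range(steps):
        p = position
        while p < len(wheels):
            wheels[p] = (wheels[p] + 1) % 10
            if wheels[p] != 0:
                break      # no rollover, so the carry stops here
            p += 1         # rolled over from 9 to 0: carry into the next wheel


def add_number(wheels, number):
    """Add a non-negative integer by dialing each of its digits on its own wheel."""
    for position, digit in enumerate(reversed(str(number))):
        dial(wheels, position, int(digit))


def reading(wheels):
    """Read the wheels as an ordinary number."""
    return int("".join(str(d) for d in reversed(wheels)))


wheels = [0] * 6           # a hypothetical six-wheel machine showing 000000
add_number(wheels, 278)
add_number(wheels, 945)
print(reading(wheels))     # 1223
```

In this sketch a carry that runs past the last wheel is simply lost, much as a car’s odometer wraps around to zero.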

Babbage’s Analytical Engine: The Dream of a Mechanical Computer

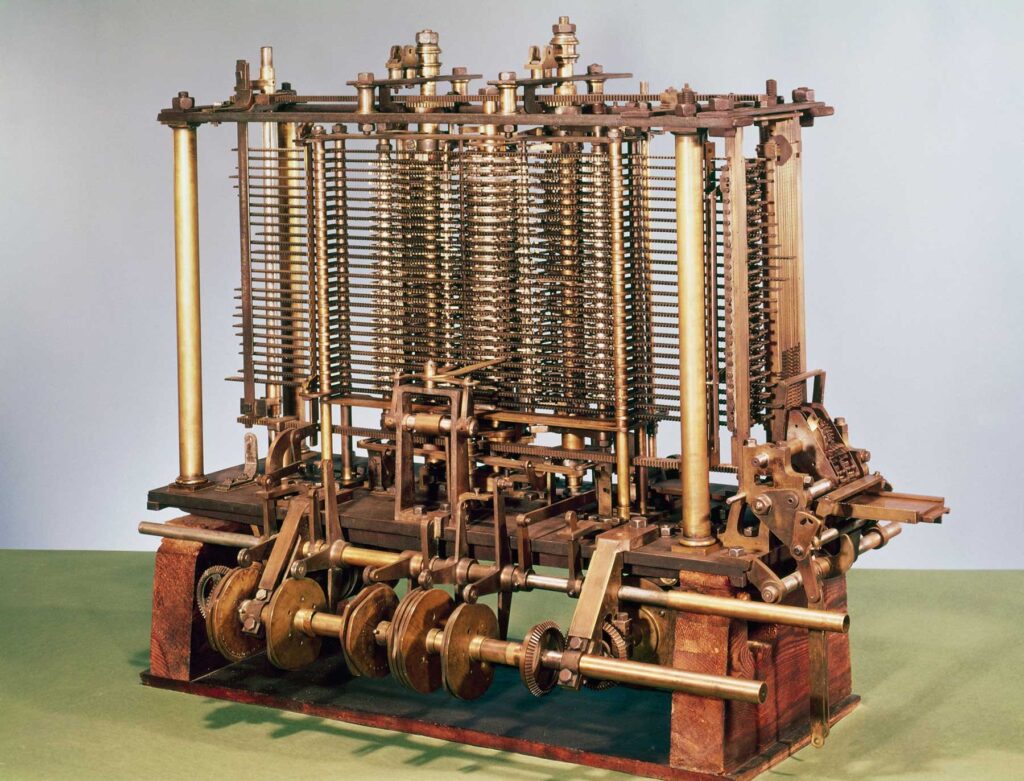

Now, let’s jump to 1837. Charles Babbage had a revolutionary idea: what if we could create a machine that could do more than just calculate? What if it could follow instructions and make decisions? This was the concept behind his Analytical Engine.

Imagine a massive machine made of gears, levers, and steam-powered components. It would have a “store” (like today’s computer memory) to hold numbers, a “mill” to perform calculations (like a modern CPU), and even a way to input instructions using punched cards (similar to early computer programming).

While Babbage’s machine was never completed, his ideas were groundbreaking. He envisioned concepts like loops (repeating a set of instructions) and conditional branching (making decisions based on results), which are fundamental to how computers work today.
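The ideas of a store, a mill, instruction cards, loops, and conditional branching can be sketched in a few lines of Python. This is a toy model written for illustration only: the card names (`add`, `sub`, `jump_if_positive`) and the function `run_engine` are invented here and are not Babbage’s own notation.

```python
def run_engine(cards, store):
    """Run a list of instruction 'cards' against a 'store' of named numbers."""
    position = 0                          # which card is being read
    while position < len(cards):
        op, *args = cards[position]
        if op == "add":                   # the 'mill' adds two stored numbers
            dst, a, b = args
            store[dst] = store[a] + store[b]
        elif op == "sub":                 # the 'mill' subtracts
            dst, a, b = args
            store[dst] = store[a] - store[b]
        elif op == "jump_if_positive":    # conditional branch
            cell, target = args
            if store[cell] > 0:
                position = target         # go back: this is what makes a loop
                continue
        else:
            raise ValueError(f"unknown card: {op}")
        position += 1
    return store


# Add up 5 + 4 + 3 + 2 + 1 by repeating the same three cards.
store = {"total": 0, "counter": 5, "one": 1}
cards = [
    ("add", "total", "total", "counter"),   # card 0: total = total + counter
    ("sub", "counter", "counter", "one"),   # card 1: counter = counter - 1
    ("jump_if_positive", "counter", 0),     # card 2: repeat while counter > 0
]
print(run_engine(cards, store)["total"])    # 15
```

The last card is the interesting one: the machine looks at a result (is the counter still positive?) and decides which card to read next, which is exactly the kind of decision a simple calculator cannot make.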

Electromechanical Computers: When Electricity Met Mechanics

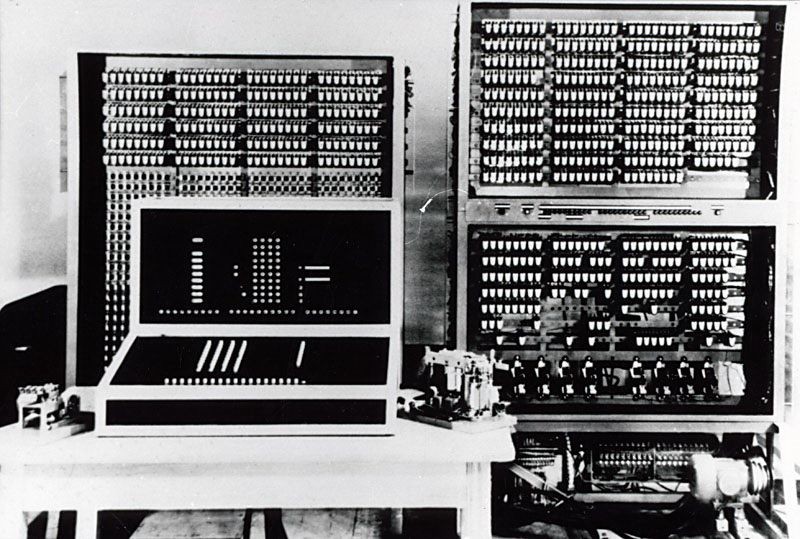

As we enter the 1930s and 1940s, a new player enters the scene: electricity. Inventors realized that by combining mechanical parts with electrical circuits, they could create more powerful and reliable computing machines.

One such machine was Konrad Zuse’s Z3, built in 1941. Picture a room-sized device with rows of electromechanical relays (switches controlled by electricity) working together to perform calculations. Another example was Harvard’s Mark I, completed in 1944. It used electrical signals to control its mechanical parts, allowing it to perform complex calculations for the U.S. Navy during World War II.

These machines were a crucial step forward. They showed that electricity could be used to control and enhance mechanical computing, paving the way for even more advanced systems.

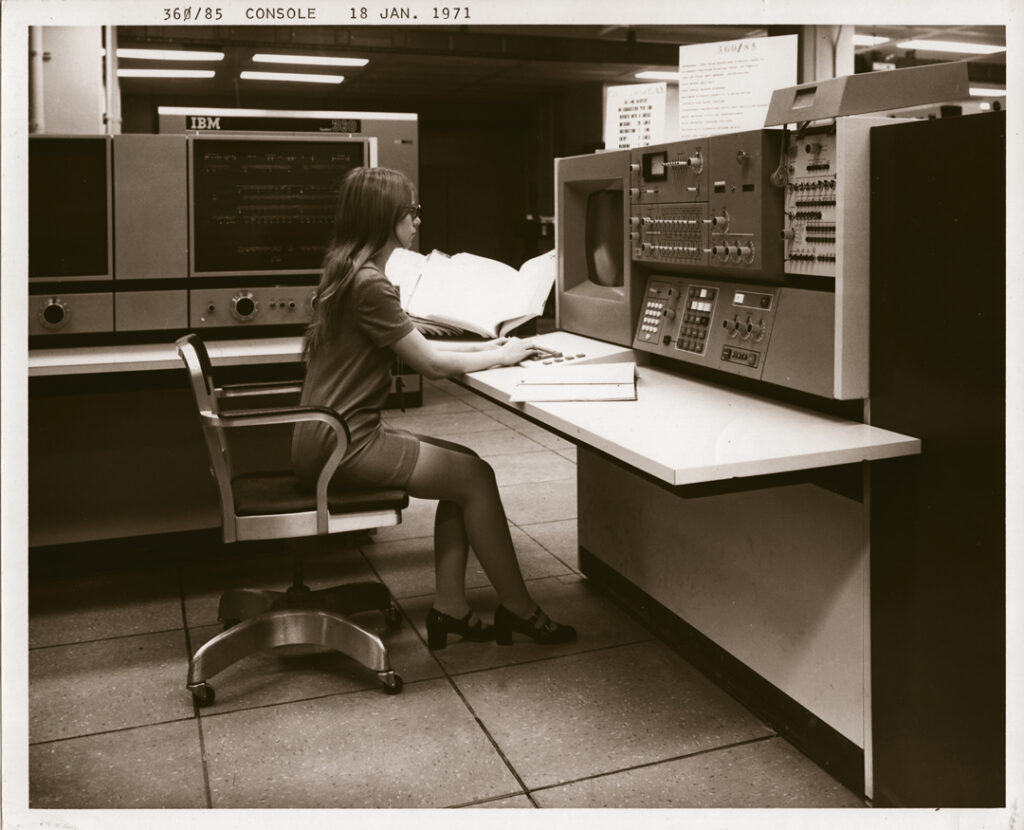

Electronic Computers: The Dawn of Modern Computing

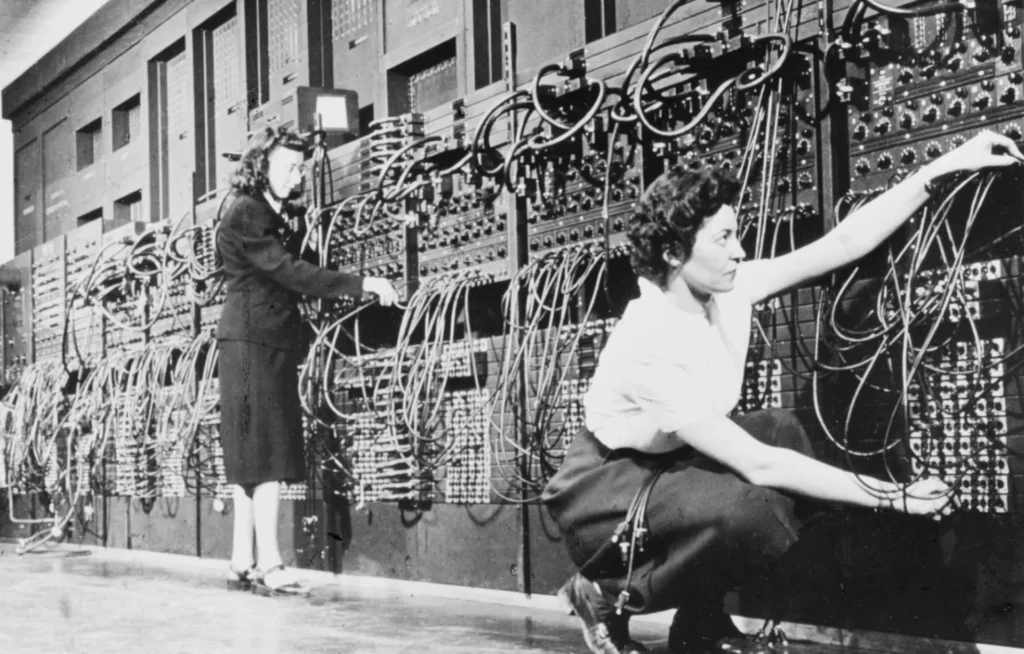

The next big leap came with fully electronic computers in the 1940s. The most famous of these was ENIAC (Electronic Numerical Integrator and Computer), completed in 1945 and publicly unveiled in February 1946.

Imagine a massive room filled with large metal cabinets, each packed with glowing vacuum tubes. These vacuum tubes, similar to old-fashioned light bulbs, could be used as fast electronic switches.

For the especially curious, here are the details of how they functioned as switches:

- Structure: A vacuum tube is a glass bulb with most of the air removed, containing a few metal components inside.

- Components: Inside a basic switching tube (a triode) are three main parts:

  - A cathode: a negatively charged electrode that emits electrons when heated

  - An anode: a positively charged electrode that can attract electrons

  - A control grid: a wire mesh between the cathode and anode

- How it works as a switch:

  - When the control grid is held sufficiently negative relative to the cathode, it repels the electrons from the cathode, preventing them from reaching the anode. This is like an “off” state.

  - When the grid voltage is raised (to around zero or slightly positive), electrons can flow from the cathode to the anode. This is like an “on” state.

  - By controlling the voltage on the grid, the tube can rapidly switch between “on” and “off” states, acting as an electronic switch.

- Speed: These switches could change states tens of thousands of times per second or more, much faster than any mechanical switch.
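The switching behaviour described above can be sketched as a small Python model, along with a hint of how such switches combine into logic. This is an idealized illustration: the voltage values and the names `tube_conducts`, `inverter`, and `nor_gate` are assumptions chosen for the example, not measurements from any real tube or circuit.

```python
# Idealized model of a tube as a switch; all voltages are illustrative assumptions.
CUTOFF_VOLTS = -5.0    # assumed grid voltage below which no current flows
LOW_VOLTS = -10.0      # assumed grid voltage representing a logical 0
HIGH_VOLTS = 2.0       # assumed grid voltage representing a logical 1


def tube_conducts(grid_volts):
    """Return True when the grid lets electrons reach the anode ('on')."""
    return grid_volts > CUTOFF_VOLTS


def to_grid_volts(bit):
    """Translate a logical bit into the assumed grid voltage."""
    return HIGH_VOLTS if bit else LOW_VOLTS


def inverter(bit):
    """One tube: when it conducts, it pulls its output low, so the bit flips."""
    return 0 if tube_conducts(to_grid_volts(bit)) else 1


def nor_gate(a, b):
    """Two tubes sharing one output: if either conducts, the output goes low."""
    either_on = tube_conducts(to_grid_volts(a)) or tube_conducts(to_grid_volts(b))
    return 0 if either_on else 1


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", nor_gate(a, b))
```

The point of the sketch is that a device with only two states, on and off, is enough to build logic, and logic gates can in turn be wired together to do arithmetic.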

By using roughly 18,000 of these tubes instead of mechanical parts, ENIAC could perform calculations about a thousand times faster than its electromechanical predecessors.

This was a revolutionary change. Computers were no longer limited by the speed of moving parts. They could now perform complex calculations in seconds that would have taken hours or days before. This marked the beginning of the modern computer age, where speed and electronic control became the new standard.

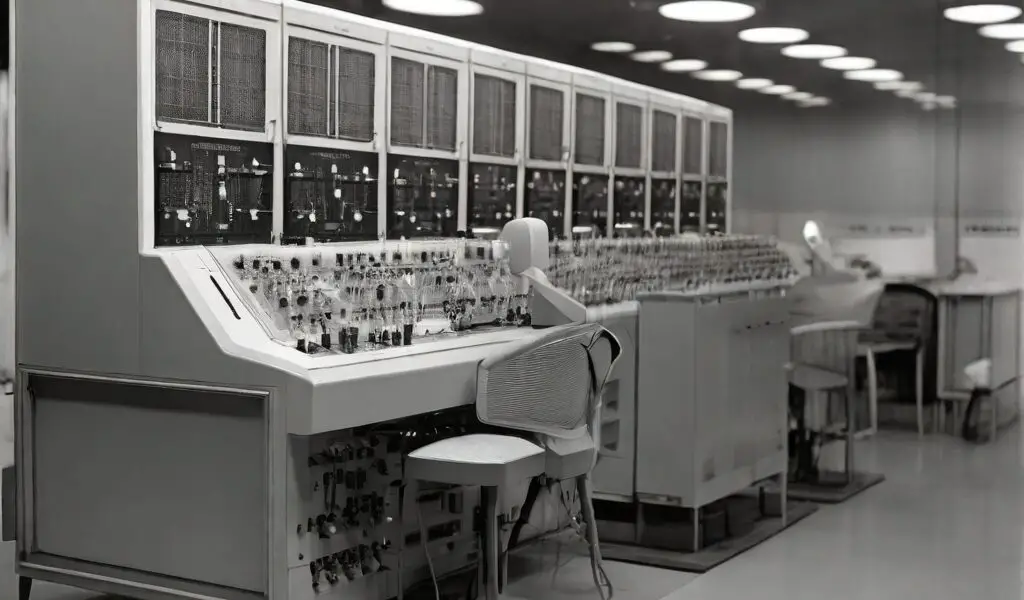

The Invention of the Transistor: Shrinking the Future

In 1947, a tiny device called the transistor was invented, and it would change everything. Imagine a component smaller than a fingernail that could do the job of a vacuum tube but better in every way.

One of the first computers built with transistors instead of vacuum tubes was the TX-0 (Transistorized Experimental computer), developed at the Massachusetts Institute of Technology (MIT) in 1956.

Transistors were smaller, faster, and more reliable than vacuum tubes. They also used much less power and generated less heat. This meant that computers could become smaller, more powerful, and more efficient all at once.

The impact was enormous. Computers that once filled entire rooms could now fit into much smaller spaces. They became more reliable, as transistors were less likely to fail than fragile vacuum tubes. This innovation opened the door to a new generation of computers and laid the groundwork for the electronic devices we use today.

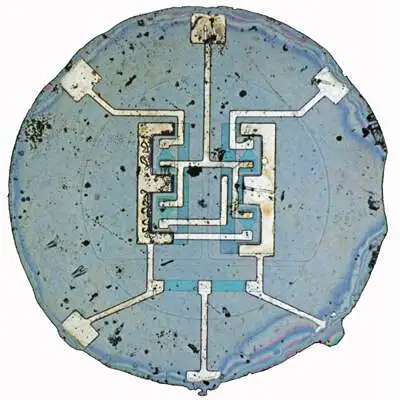

Integrated Circuits: Packing More Power into Tiny Spaces

The next big step came in 1958 with the invention of the integrated circuit (IC). Imagine taking multiple transistors and other electronic components and combining them onto a single, tiny chip of silicon.

This was a game-changer. Instead of assembling individual components one by one, manufacturers could now create entire circuits on a single chip. It’s like the difference between building a house brick by brick versus creating entire wall sections in a factory and assembling them on-site.

Integrated circuits made computers even smaller, faster, and more reliable. They also made computers cheaper to produce, as manufacturing a single chip was more efficient than assembling many individual components. This innovation paved the way for more complex and powerful computing systems, setting the stage for the personal computer revolution.

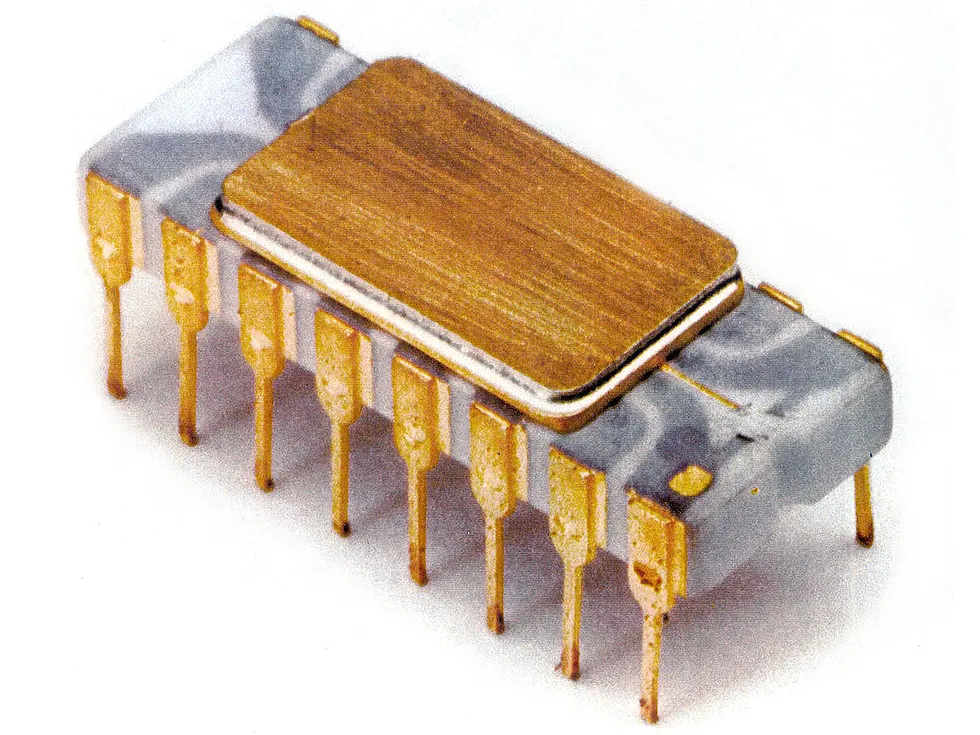

Microprocessors: The Computer’s Brain on a Chip

In 1971, another revolutionary step occurred: the creation of the microprocessor. Imagine taking the central processing unit (CPU) – the “brain” of a computer that performs all the calculations and controls – and shrinking it down to fit on a single chip.

The first commercially available microprocessor, Intel’s 4004, was a marvel of engineering. It packed computing power roughly comparable to early room-sized machines into a chip the size of a fingernail. This tiny powerhouse could execute tens of thousands of instructions per second.

The impact of microprocessors was immense. They dramatically reduced the size and cost of computers while increasing their power. This innovation made it possible to put computer technology into smaller devices, setting the stage for personal computers, and later, smartphones and other portable devices.

Personal Computers: Computing Comes Home

The microprocessor revolution led directly to the era of personal computers in the 1970s and 1980s. For the first time, individuals could own and use powerful computing machines in their homes and offices.

Early models like the Altair 8800 (1975) were still largely for hobbyists, requiring assembly and programming knowledge. But soon, more user-friendly computers like the Apple II (1977) and the IBM PC (1981) arrived. These machines came with keyboards, monitors, and eventually, software that anyone could learn to use.

This was a democratizing moment in computing history. Tasks that once required access to large, expensive machines in universities or businesses could now be done at home. Word processing, spreadsheets, and computer games became part of everyday life for many people.

Graphical User Interfaces: Making Computers User-Friendly

As personal computers became more common, the next challenge was making them easier to use. Enter the graphical user interface (GUI) in the 1980s and 1990s.

Before GUIs, using a computer often meant typing commands in a text-based interface. Imagine having to remember and type specific commands for every task you wanted to perform. GUIs changed this by representing commands and files as visual icons and windows that could be manipulated with a mouse.

Systems like the Apple Macintosh (1984) and Microsoft Windows (1985) brought this user-friendly interface to the masses. Suddenly, you could point and click to open programs, move files, or delete items. This made computers accessible to a much wider audience, as users no longer needed to memorize complex commands.

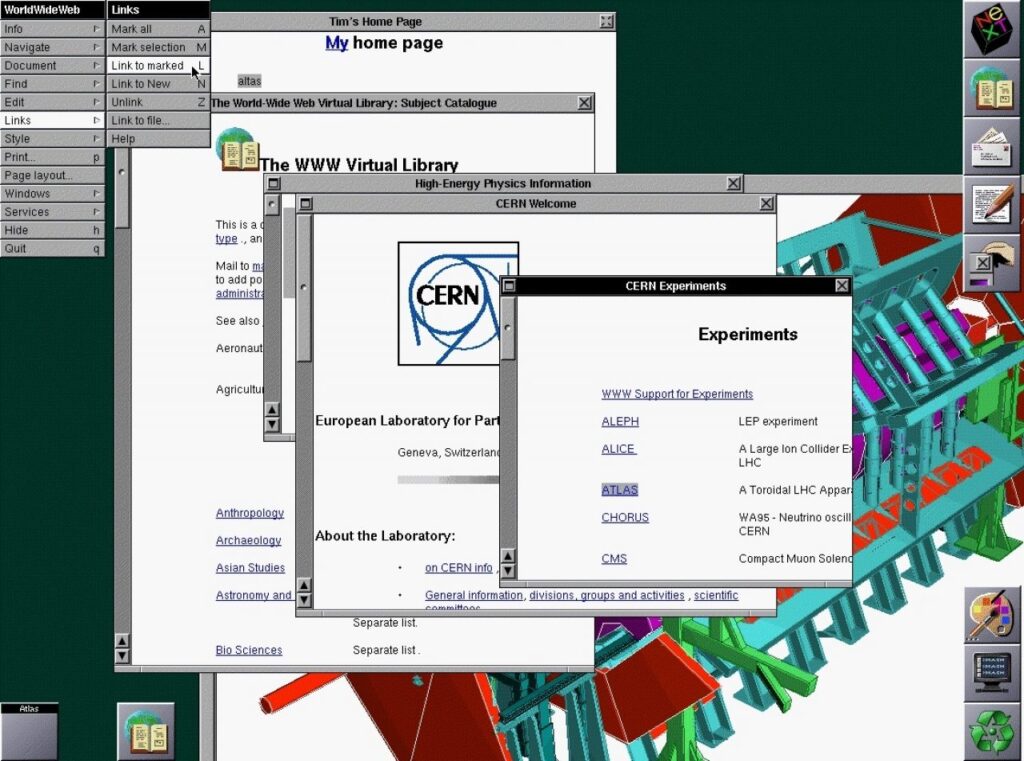

The Internet and World Wide Web: Connecting the World

The next transformative step was the widespread adoption of the Internet and the rise of the World Wide Web in the 1990s. While the Internet had existed in various forms since the 1960s, it was the Web that made it accessible and useful for everyday people.

Imagine a vast network connecting computers all over the world, allowing them to share information instantly. The World Wide Web provided an easy way to navigate this network using hyperlinks and web browsers.

The World Wide Web (WWW) was proposed by Sir Tim Berners-Lee in 1989 while he was working at CERN (the European Organization for Nuclear Research). He wrote the first web browser, WorldWideWeb, and the first web server, httpd, in 1990. The web became publicly accessible in August 1991.

This development transformed computers from standalone devices into gateways to a world of information. Email, websites, and later, social media, changed how we communicate, work, and access information. The Internet became a platform for commerce, education, entertainment, and global communication.

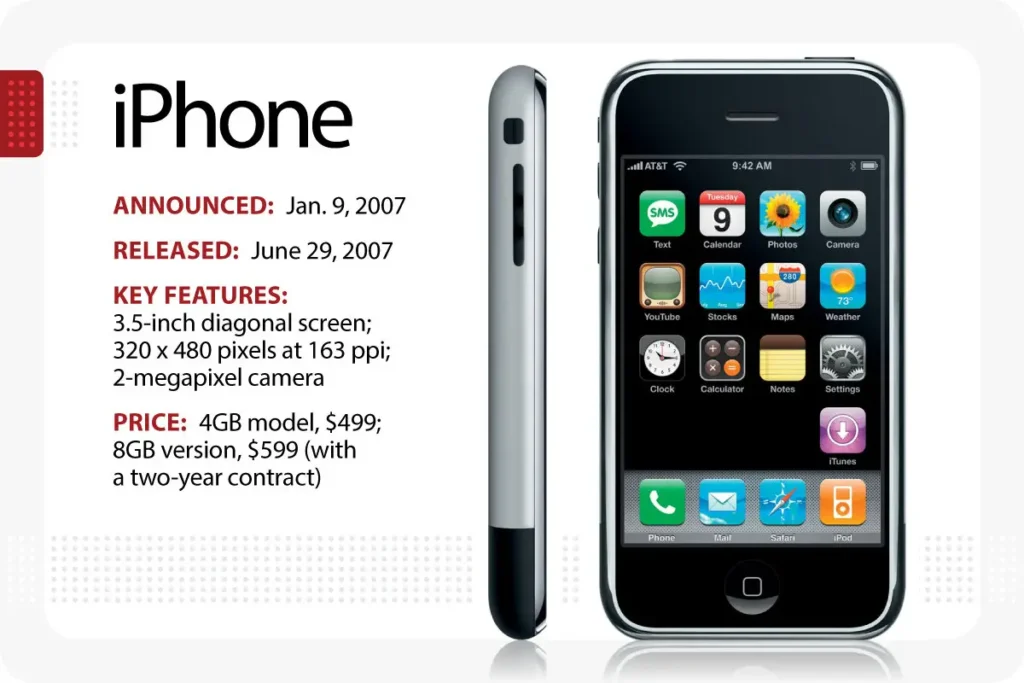

Mobile Computing and Smartphones: Computers in Our Pockets

As computers became smaller and more powerful, the next logical step was to make them portable. The era of mobile computing, epitomized by smartphones, began in earnest in the 2000s.

Devices like the iPhone, introduced in 2007, were a quantum leap forward. They combined the functions of a computer, a phone, and other devices into a single, pocket-sized package. With touchscreens and intuitive interfaces, these devices made powerful computing and internet access available literally at our fingertips.

This shift had a profound impact on how we interact with technology and each other. Suddenly, we had access to vast amounts of information, communication tools, and useful applications wherever we went. Mobile apps expanded the capabilities of these devices, turning them into tools for navigation, health tracking, mobile banking, and much more.

Cloud Computing: Infinite Resources in the Sky

As internet speeds increased and data centers grew more powerful, a new model of computing emerged in the late 2000s and became mainstream in the 2010s: cloud computing. Imagine being able to access vast computing resources – storage, processing power, and software – over the internet, without needing powerful hardware of your own.

Services like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud made this a reality. Instead of storing all your files on your personal computer or running complex software locally, you could now do these things “in the cloud” – on remote servers accessed via the internet.

This shift had significant implications. Businesses could access powerful computing resources without massive upfront investments. Individuals could store and access their data from any device. Software could be delivered as a service over the internet, leading to the rise of web-based applications for everything from office work to photo editing.

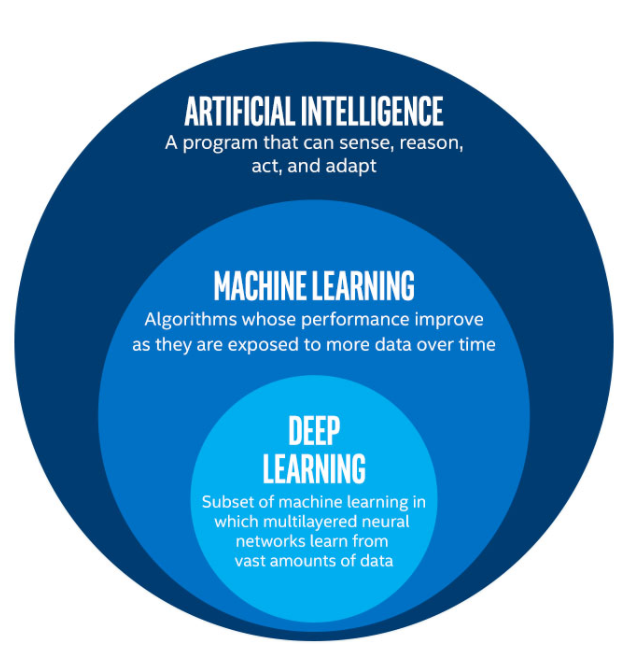

Artificial Intelligence and Machine Learning: Computers That Learn

Building on all the previous advancements, artificial intelligence (AI) and machine learning have become central to modern computing in the 2020s. These technologies enable computers to learn from data and perform complex tasks that once seemed possible only for humans.

Imagine a computer system that can analyze millions of images and learn to recognize specific objects or faces. Or a program that can understand and translate human languages, or even generate human-like text. These are just a few examples of what AI and machine learning can do.

These technologies are being applied in various fields, from healthcare (analyzing medical images) to finance (detecting fraudulent transactions) to personal assistants like Siri or Alexa. They represent a shift from computers that simply follow pre-programmed instructions to systems that can learn, adapt, and make decisions based on data.

Quantum Computing: The Next Frontier

As we look to the future, quantum computing emerges as a potential next leap in computing power. While still in early stages of development, quantum computers promise to solve certain types of problems exponentially faster than classical computers.

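Quantum computers work on principles of quantum mechanics, using quantum bits or “qubits” instead of classical bits. A classical bit is either 0 or 1, but a qubit can be in a blend of both (a superposition) until it is measured. Imagine a computer that can work with many possible answers at once and use clever algorithms to make the right one stand out when the result is read.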
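For readers who want to see what “many possibilities at once” means in numbers, here is a minimal Python sketch that simulates a few qubits on an ordinary computer. It is a classroom-style illustration, not how real quantum hardware is programmed: the function name `hadamard_on` is an assumption for this example, and the gate it applies (the Hadamard gate) is just one standard way to put a qubit into an equal blend of 0 and 1.

```python
import math


def hadamard_on(state, target):
    """Apply a Hadamard gate to one qubit of a state vector.

    `state` is a list of 2**n amplitudes; index bits give each qubit's value.
    """
    new_state = [0.0] * len(state)
    mask = 1 << target
    s = 1 / math.sqrt(2)
    for index, amplitude in enumerate(state):
        zero_index = index & ~mask        # same state with the target qubit = 0
        one_index = index | mask          # same state with the target qubit = 1
        if index & mask:                  # qubit was 1: becomes (0 - 1) / sqrt(2)
            new_state[zero_index] += s * amplitude
            new_state[one_index] -= s * amplitude
        else:                             # qubit was 0: becomes (0 + 1) / sqrt(2)
            new_state[zero_index] += s * amplitude
            new_state[one_index] += s * amplitude
    return new_state


n_qubits = 3
state = [0.0] * (2 ** n_qubits)
state[0] = 1.0                            # start with every qubit at 0

for qubit in range(n_qubits):
    state = hadamard_on(state, qubit)

# Probability of each of the 8 possible readings (amplitude squared).
probabilities = [round(a * a, 3) for a in state]
print(probabilities)
```

After the loop, all eight possible three-bit readings are equally likely. Note that measuring still produces only one of them, which is why quantum speedups depend on carefully designed algorithms rather than on simply trying everything in parallel. Note also that the list of amplitudes doubles in length with every added qubit, which is one reason ordinary computers struggle to simulate large quantum systems.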

If successfully developed, quantum computers could revolutionize fields like cryptography, drug discovery, and complex system simulation. They represent the cutting edge of computing research and may open doors to solving problems that are currently beyond our reach.

This journey from the abacus to quantum computing showcases human ingenuity and our constant drive to push the boundaries of what’s possible. Each step built upon the last, solving existing problems while creating new possibilities. As we continue this journey, who knows what amazing innovations the future of computing will bring?